在Python使用Hadoop来处理大量的CSV文件文件、Python、Hadoop、CSV

我有一个巨大的CSV文件,我想使用Hadoop麻preduce在亚马逊EMR(蟒蛇)来处理。

该文件有7场,但是,我只在看日和量字段。

日期,receiptIdproductId参数,量价posIdcashierId

首先,我mapper.py

进口SYS

高清主(argv的):

行= sys.stdin.readline()

尝试:

而行:

表= line.split('\ t')

#如果日期符合标准,加量至前preSS关键

如果INT(名单[0] [11:13])> = 17和INT(名单[0] [11:13])< = 19:

打印%S \ t%s的%(前preSS,INT(名单[3]))

#ELSE,加量不-EX preSS关键

其他:

打印%S \ t%s的%(非-EX preSS,INT(名单[3]))

行= sys.stdin.readline()

除了文件结尾:

返回None

如果__name__ ==__main__:

主(sys.argv中)

有关减速,我将使用流命令:聚合

问:

是我的code吗?我跑了它在亚马逊电子病历,但我有一个空的输出。

所以,我的最终结果应该是:EX preSS,XXX和非EX preSS,YYY。我可以返回结果之前,做一个除法操作? XXX / YYY的只是结果。我应该在哪里把这个code?减速??

此外,这是一个巨大的CSV文件,所以会映射分割成几个分区?或者我需要显式调用FileSplit?如果是这样,我该怎么做呢?

解决方案在这里回答我的问题!

在code是错误的。如果您使用的是总库减少,你的输出不按照通常的键值对。它需要一个preFIX。

如果INT(名单[0] [11:13])> = 17和INT(名单[0] [11:13])< = 19:

#这个是打印的总库的正确方法

#PRINT都作为一个字符串。

打印LongValueSum:+前preSS+\ t+列表[3]

其他prefixes可用的有:DoubleValueSum,LongValueMax,LongValueMin,StringValueMax,StringValueMin,UniqValueCount,ValueHistogram。欲了解更多信息,请看这里http://hadoop.apache.org/common/docs/r0.15.2/api/org/apache/hadoop/ma$p$pd/lib/aggregate/package-summary.html.

是的,如果你想要做的不仅仅是基本的总和,最小值,最大值或计数,你需要编写自己减速。

我还没有答案。

I have a huge CSV file I would like to process using Hadoop MapReduce on Amazon EMR (python).

The file has 7 fields, however, I am only looking at the date and quantity field.

"date" "receiptId" "productId" "quantity" "price" "posId" "cashierId"

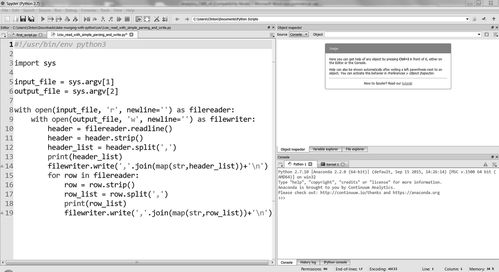

Firstly, my mapper.py

import sys

def main(argv):

line = sys.stdin.readline()

try:

while line:

list = line.split('\t')

#If date meets criteria, add quantity to express key

if int(list[0][11:13])>=17 and int(list[0][11:13])<=19:

print '%s\t%s' % ("Express", int(list[3]))

#Else, add quantity to non-express key

else:

print '%s\t%s' % ("Non-express", int(list[3]))

line = sys.stdin.readline()

except "end of file":

return None

if __name__ == "__main__":

main(sys.argv)

For the reducer, I will be using the streaming command: aggregate.

Question:

Is my code right? I ran it in Amazon EMR but i got an empty output.

So my end result should be: express, XXX and non-express, YYY. Can I have it do a divide operation before returning the result? Just the result of XXX/YYY. Where should i put this code? A reducer??

Also, this is a huge CSV file, so will mapping break it up into a few partitions? Or do I need to explicitly call a FileSplit? If so, how do I do that?

解决方案

Answering my own question here!

The code is wrong. If you're using aggregate library to reduce, your output does not follow the usual key value pair. It requires a "prefix".

if int(list[0][11:13])>=17 and int(list[0][11:13])<=19:

#This is the correct way of printing for aggregate library

#Print all as a string.

print "LongValueSum:" + "Express" + "\t" + list[3]

The other "prefixes" available are: DoubleValueSum, LongValueMax, LongValueMin, StringValueMax, StringValueMin, UniqValueCount, ValueHistogram. For more info, look here http://hadoop.apache.org/common/docs/r0.15.2/api/org/apache/hadoop/mapred/lib/aggregate/package-summary.html.

Yes, if you want to do more than just the basic sum, min, max or count, you need to write your own reducer.

I do not yet have the answer.