Uploading images to Amazon S3

I am uploading images to an Amazon S3 bucket, but it takes about 2 minutes to upload 10 images, and I have 10 GB of images to upload. I also want to receive an update for each image so I can be sure it's working.

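    // $handle comes from opendir($dir); $dir is assumed to hold that directory's path
    set_time_limit(0); // lift the execution time limit once, before the loop

    while (false !== ($file = readdir($handle))): // strict check so a file named "0" doesn't end the loop
        $path = $dir . '/' . $file; // readdir() returns bare names, so build the full path
        if (!is_file($path)) {
            continue; // skip ".", ".." and subdirectories
        }
        $extn = strtolower(pathinfo($file, PATHINFO_EXTENSION));
        if (in_array($extn, $includedExtn)) {
            if ($s3->putObjectFile($path, $bucketName, $file, S3::ACL_PUBLIC_READ)) {
                echo "S3::putObjectFile(): File copied to {$bucketName}/{$file}<br/>\n";
            } else {
                echo "S3::putObjectFile(): Failed to copy file {$file}<br/>\n";
            }
            flush(); // push this progress line out now; with output buffering on, call ob_flush() first
        }
    endwhile;

    closedir($handle);
    echo "No more files left";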
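If the goal is a visible update per image, it helps to know the total up front so each line can say how far along the run is. The sketch below is one way to do that, not the poster's code: `listImages()` and `uploadWithProgress()` are hypothetical helpers, and `$uploadOne` is an assumed callable that would wrap the real upload, for example `fn($path, $key) => $s3->putObjectFile($path, $bucketName, $key, S3::ACL_PUBLIC_READ)`.

```php
<?php
// Hypothetical helper: return the names of regular files in $dir whose
// lowercased extension appears in $includedExtn (expected to be lowercase).
function listImages(string $dir, array $includedExtn): array
{
    $names = scandir($dir); // sorted alphabetically by default
    if ($names === false) {
        throw new RuntimeException("cannot read directory $dir");
    }
    $files = [];
    foreach ($names as $name) {
        $path = $dir . DIRECTORY_SEPARATOR . $name;
        $ext = strtolower(pathinfo($name, PATHINFO_EXTENSION));
        if (is_file($path) && in_array($ext, $includedExtn, true)) {
            $files[] = $name;
        }
    }
    return $files;
}

// Hypothetical helper: upload each file through $uploadOne($path, $key),
// print "[n/total] copied|FAILED name" after every file, and return the
// names that failed so they can be retried.
function uploadWithProgress(string $dir, array $files, callable $uploadOne): array
{
    $failed = [];
    $total = count($files);
    foreach (array_values($files) as $i => $name) {
        $ok = (bool) $uploadOne($dir . DIRECTORY_SEPARATOR . $name, $name);
        if (!$ok) {
            $failed[] = $name;
        }
        printf("[%d/%d] %s %s\n", $i + 1, $total, $ok ? 'copied' : 'FAILED', $name);
        flush(); // hand the line to the terminal or web server right away
    }
    return $failed;
}
```

Returning the failed names means a second pass can retry just those files instead of re-uploading the whole directory.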

Solution

It looks like you're using pretty much the same code I use. Mine has the same speed issue, but I got faster results by running the same script concurrently (several copies in parallel). Each individual PUT seems to be limited in speed, but there is no limit on the number of concurrent PUTs (or if there is, I haven't found it yet). I generally run 6 copies of the script at a time from the command line.
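Starting several copies by hand works; a small PHP launcher can do the same from one command. This is a minimal sketch, assuming a worker script (its path is up to you) that accepts `<workerId> <workerCount>` arguments. `launchWorkers()` is a hypothetical helper, and the array form of `proc_open()` used here requires PHP 7.4 or later.

```php
<?php
// Hypothetical launcher: start $workerCount copies of $script in parallel,
// passing each one its index and the total, then wait for all of them.
// Returns the exit code of each worker, keyed by worker index.
function launchWorkers(string $script, int $workerCount): array
{
    $procs = [];
    for ($i = 0; $i < $workerCount; $i++) {
        // Array form runs the binary directly, without a shell (PHP 7.4+).
        $cmd = [PHP_BINARY, $script, (string) $i, (string) $workerCount];
        // No descriptors specified, so the worker keeps this process's stdio.
        $proc = proc_open($cmd, [], $pipes);
        if ($proc === false) {
            throw new RuntimeException("could not start worker $i");
        }
        $procs[$i] = $proc;
    }
    $exitCodes = [];
    foreach ($procs as $i => $proc) {
        $exitCodes[$i] = proc_close($proc); // blocks until that worker exits
    }
    return $exitCodes;
}
```

For six parallel runs this would be `launchWorkers('/path/to/upload.php', 6)`, where the path is a placeholder. A non-zero exit code points at the worker whose share should be checked or rerun.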

You just need to make sure your code doesn't upload the same file more than once, or you'll pay for multiple requests for that file, so split your data source into segments. I include the upload code in several scripts, each of which specifies a unique segment of the database it should work on.
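When the source is a directory rather than a database, one simple form of that segmentation is to have every worker sort the same file list and keep only every N-th entry. A minimal sketch: `filesForWorker()` is a hypothetical name, and it assumes all workers see the same set of files while they run.

```php
<?php
// Hypothetical helper: give worker $workerId (0-based) of $workerCount a
// disjoint share of $files. Every worker sorts identically, so together they
// cover each file exactly once and no file is uploaded (or billed) twice.
function filesForWorker(array $files, int $workerId, int $workerCount): array
{
    if ($workerCount < 1 || $workerId < 0 || $workerId >= $workerCount) {
        throw new InvalidArgumentException('need 0 <= workerId < workerCount');
    }
    sort($files, SORT_STRING); // same order in every process; keys become 0..n-1
    $mine = [];
    foreach ($files as $i => $name) {
        if ($i % $workerCount === $workerId) {
            $mine[] = $name;
        }
    }
    return $mine;
}
```

A worker started as `php upload.php 2 6` (hypothetical script name) would call `filesForWorker($files, 2, 6)` and upload only what it returns. If files may be added while workers are starting, assigning by a hash of the file name instead of by sorted position would keep the split stable.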

上一篇:如何添加或修改在Amazon S3中现有对象的内容处置?对象、内容、Amazon

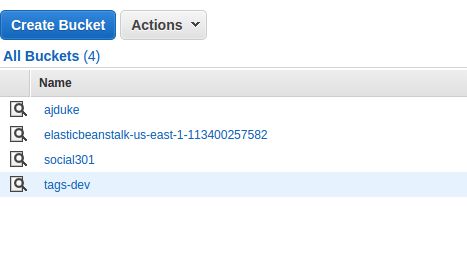

下一篇:宝途S3错误。 BucketAlreadyOwnedByYou错误、宝途、BucketAlreadyOwnedByYou