Using a Lambda function to unzip archives in S3 is really sloooooow

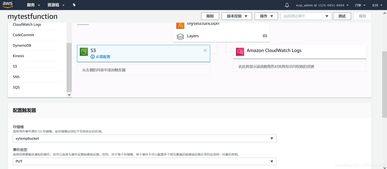


My company uploads large archive files to S3 and now wants them to be unzipped on S3. I wrote a Lambda function based on the unzip module. It is triggered by the arrival of a file in the xxx-zip bucket, streams the zip file from S3, unzips the stream, and then streams the individual files to the xxx-data bucket.

It works, but it is much slower than I expected. Even on a test file of about 500 KB zipped, holding around 500 files, it times out with a 60-second timeout set. Does this seem right? Running with Node on my local system, it is faster than this. It seems to me that since the files are being moved inside Amazon's cloud, latency should be short, and since the files are being streamed, the actual time taken should be about the time it takes to unzip the stream.

Is there an inherent reason why this won't work, or is there something in my code that makes it so slow? This is the first time I've worked with Node.js, so I could be doing something badly. Or is there a better way to do this that I couldn't find with Google?

Here is an outline of the code (BufferStream is a class I wrote that wraps the Buffer returned by s3.getObject() in a readable stream):

```javascript
var aws = require('aws-sdk');
var s3 = new aws.S3({apiVersion: '2006-03-01'});
var unzip = require('unzip');
var stream = require('stream');
var util = require('util');
var fs = require('fs');

// SOURCE_BUCKET, DEST_BUCKET and BufferStream are defined elsewhere (not shown in this outline).
exports.handler = function(event, context) {
  var zipfile = event.Records[0].s3.object.key;

  // With a callback, getObject loads the whole zip object into memory (data.Body is a Buffer).
  s3.getObject({Bucket: SOURCE_BUCKET, Key: zipfile},
    function(err, data) {
      var errors = 0;
      var total = 0;
      var successful = 0;
      var active = 0;

      if (err) {
        console.log('error: ' + err);
      }
      else {
        console.log('Received zip file ' + zipfile);

        // Wrap the Buffer in a readable stream and parse the archive entry by entry.
        new BufferStream(data.Body)
          .pipe(unzip.Parse()).on('entry', function(entry) {
            total++;
            var filename = entry.path;
            var in_process = ' (' + ++active + ' in process)';
            console.log('extracting ' + entry.type + ' ' + filename + in_process);

            // Stream each extracted entry straight into the destination bucket.
            s3.upload({Bucket: DEST_BUCKET, Key: filename, Body: entry}, {},
              function(err, data) {
                var remaining = ' (' + --active + ' remaining)';
                if (err) {
                  // if for any reason the file is not read discard it
                  errors++;
                  console.log('Error pushing ' + filename + ' to S3' + remaining + ': ' + err);
                  entry.autodrain();
                }
                else {
                  successful++;
                  console.log('successfully wrote ' + filename + ' to S3' + remaining);
                }
              });
          });

        // Note: these two lines run as soon as the pipe is set up,
        // before any 'entry' event has fired or any upload has completed.
        console.log('Completed, ' + total + ' files processed, ' + successful + ' written to S3, ' + errors + ' failed');
        context.done(null, '');
      }
    }
  );
}
```
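The BufferStream class mentioned above is not shown in the question. A minimal sketch of what such a class could look like, assuming it only needs to emit the whole Buffer once and then end (the class body and the `_pending` field are illustrative, not the author's code):

```javascript
const { Readable } = require('stream');

// Hypothetical reconstruction of the question's BufferStream:
// exposes an in-memory Buffer as a readable stream.
class BufferStream extends Readable {
  constructor(buffer, options) {
    super(options);
    this._pending = buffer; // data not yet pushed to consumers
  }

  // Push the whole buffer on the first read, then signal end-of-stream.
  _read() {
    if (this._pending !== null) {
      const chunk = this._pending;
      this._pending = null;
      this.push(chunk);
    }
    this.push(null);
  }
}
```

An equivalent without a custom class is to create a `stream.PassThrough` and call `.end(buffer)` on it, then pipe that into the parser.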

Solution

I suspect that the unzip module you are using is a JavaScript implementation of zip extraction, which is very slow.

I recommend compressing the files with gzip instead and decompressing them with Node's built-in zlib module, which wraps the compiled C zlib library and should provide much better performance.
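A minimal sketch of the gzip route, using only Node's built-in `zlib` and `stream` modules. The function name `gunzipInto` is hypothetical, and the S3 wiring mentioned in the comments (a `s3.getObject(params).createReadStream()` source in SDK v2, a `PassThrough` passed as the `Body` of `s3.upload`) is an assumption for illustration. Note that gzip compresses a single stream rather than a collection of files, so an archive of many files would typically be packed with tar first (a `.tar.gz`), and splitting the tar back into entries needs a separate module.

```javascript
const zlib = require('zlib');
const { pipeline } = require('stream');

// Stream-decompress gzip data with Node's built-in zlib (backed by C zlib).
// `source`: any readable stream of gzip bytes (e.g. an S3 object stream).
// `destination`: any writable stream (e.g. a PassThrough used as an upload Body).
// `callback(err)` is called once: with an error if any stage fails, else with none.
function gunzipInto(source, destination, callback) {
  // pipeline handles backpressure and destroys every stream if one of them fails.
  pipeline(source, zlib.createGunzip(), destination, callback);
}
```

Because the data never has to be fully buffered, memory use stays roughly constant regardless of the archive size.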

If you choose to stick with zip, you could contact Amazon support and ask them to raise the 60-second limit on your Lambda function.
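Separately from the choice of unzip library, the outline in the question logs "Completed" and calls `context.done()` immediately after setting up the pipe, before any `'entry'` event has fired. The counts it prints are therefore read before any entry has been processed, and the invocation is signalled as finished while uploads may still be in flight. Below is a minimal sketch of deferring completion until parsing has ended and every upload has called back. `processEntries` and `uploadEntry` are hypothetical names, and the end-of-archive event name (`'close'` by default here) is an assumption to check against the unzip module's documentation.

```javascript
// Hypothetical helper: calls done(err, stats) exactly once, after the parser
// has emitted its end event AND every started upload has called back.
// uploadEntry(entry, cb) stands in for an s3.upload(...) call.
function processEntries(parser, uploadEntry, done, endEvent = 'close') {
  const stats = { total: 0, successful: 0, errors: 0 };
  let active = 0;          // uploads started but not yet called back
  let parsingDone = false; // parser has emitted endEvent
  let finished = false;    // guards against calling done() twice

  function finish(err) {
    if (finished) return;
    finished = true;
    done(err || null, stats);
  }

  function maybeFinish() {
    if (parsingDone && active === 0) finish(null);
  }

  parser.on('entry', (entry) => {
    stats.total++;
    active++;
    uploadEntry(entry, (err) => {
      active--;
      if (err) stats.errors++;
      else stats.successful++;
      maybeFinish();
    });
  });

  parser.on(endEvent, () => {
    parsingDone = true;
    maybeFinish();
  });

  // A parse error ends the run; uploads already in flight are not awaited.
  parser.on('error', (err) => finish(err));
}

// Possible wiring inside the question's getObject callback (not run here):
// processEntries(
//   new BufferStream(data.Body).pipe(unzip.Parse()),
//   function(entry, cb) {
//     s3.upload({Bucket: DEST_BUCKET, Key: entry.path, Body: entry}, function(err) {
//       if (err) entry.autodrain();
//       cb(err);
//     });
//   },
//   function(err, stats) { console.log(stats); context.done(err, ''); });
```

The counter-plus-flag approach avoids needing a promise library; the `finished` guard keeps a late upload callback from signalling completion a second time after an error.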