为什么数组大小必须是3 ^ K + 1周期领导人迭代算法来工作吗?数组、算法、领导人、周期

借助周期领袖迭代算法是一个算法用于通过移动所有偶数项的前部和所有奇数项到后面,同时preserving它们的相对次序混洗的阵列。例如,给定该输入:

A 1 B 2ç3天4 E 5

输出将

A B C D E 1 2 3 4 5

这个算法运行在O(n)的时间,并且只使用O(1)空间。

该算法的一个不寻常的细节是,它通过把阵列分成大小3 K +1块。显然,这是至关重要的,该算法能够正常工作,但我不知道这是为什么。

为什么是3选择 K + 1所需的算法?

谢谢!

解决方案

这将是一个的长的答案。在回答你的问题并不简单,而且需要一些数论全面解答。我花了大约通过算法半天工作,我现在有一个很好的答案,但我不知道我可以形容它简洁。

的短版:

打破了投入的大小3块 K + 1本质上打破了输入分割成大小为3块 K - 1由两个元素做包围最终不会移动。

其余3 K - 在块移动1元按一个有趣的模式:每个元素移至通过将指标给定的两个模3位 K 。

这个特殊的运动模式连接,从数论和群论的一个概念叫做原根。

由于两个数是原始根模3 K ,开始用数字1,3,9,27等,和运行模式是通过保证周期的所有数组恰好一次的元素,并把它们放到适当的位置。

此图案是高度依赖于一个事实,即图2是一个原始根3 K 对任意k&格; 1.改变阵列的大小为另一个值几乎肯定会打破这个,因为错误的属性是preserved

长版

要present这个答案,我会按步骤继续进行。首先,我要介绍周期分解为动机的算法,将有效地洗牌体周围以正确的顺序,如有一个重要的警告。接下来,我要指出的元素是如何发生的,当你应用此置换数组中走动一个有趣的属性。然后,我将其连接至一个数论的概念叫原根来解释所涉及的挑战正确地执行这一算法。最后,我将解释为什么这导致了选择3 K + 1的块大小。

周期分解

让我们假设你有一个数组A和数组的元素的置换。根据标准的数学符号,我们将表示该阵列的排列为:西格玛;(A)。我们可以行初始数组A上排列的阵列和六西格玛的顶部;(A)得到一个意义,每一个元素结束了。例如,这里是一个数组和它的排列之一:

A 0 1 2 3 4

σ(A)2 3 0 4 1

这是我们可以描述一个置换的方法之一就是列出关闭该置换内部的新元素。然而,从算法的角度来看,它往往更有助于重新present置换为周期分解,的方式写出的置换通过显示如何通过开始与初始数组,然后周期性地置换其部分元素以形成排列的

看看上述置换。首先,看一下0结束的地方。在&西格马;(A)中,元素0最终采取的其中使用的元件2到的地方。反过来,元件2最终采取的其中所用的元素0至的地方。我们称这个写(0 2),这表明0应该去的地方2曾经是,和2应该是0曾经是。

现在,看看元素1元1结束,其中4曾经是。数字4然后结束了其中3曾经是,和元件3结束了其中1曾经是。我们称这个写(1 4 3),即1应该去的地方4曾经是,这4应该去的地方3曾经是,而且3应该去的地方1曾经是。

结合这些在一起,我们可以重新present为(0 2)(1 4 3)上述元素的整体排列 - 我们应该交换0和2,然后循环置换1,4,和3。如果我们这样做首先是初始阵列,我们最终会在我们需要的置换阵。

周期分解是用于置换阵列的地方,因为它有可能重排任何单个周期O(C)的时间和O(1)辅助空间,其中C是在周期元素的数量非常有用。例如,假设你有一个周期(1 6 8 4 2)。您可以重排与code这样的循环中的元素:

INT []周期= {1,6,8,4,2};

INT TEMP =阵列[周期[0];

的for(int i = 1; I< cycle.length;我++){

掉期(温度,数组[周期[I]);

}

阵列[周期[0] =气温;

这工作由刚换周围的一切,直到一切都休息。除了存储周期本身所需的空间使用时,只需要O(1)辅助存储空间。

在一般情况下,如果要设计出适用的特定置换来元件的阵列的算法,通常可以通过使用循环分解这样做。一般的算法如下:

有关(在循环分解算法每个周期){ 应用上述算法,以循环那些元件; }

这个算法的整体时间和空间复杂度取决于以下因素:

我们如何能迅速决定我们想要的周期分解? 我们如何能够有效地存储在内存中?这个周期分解要获得为O(n) - 时间,O(1)空间算法手头的问题,我们要表明,有一种方法来确定O(1)时间和空间周期分解。既然一切都将被移动只出现一次,整体运行将是O(n)和整体空间复杂度将是O(1)。这并不容易到那里,你将看到的,但话又说回来,这是不是可怕的两种。

的排列结构

这个问题的首要目标是把2n个元素的数组,并打乱它,这样即使是定位的元素最终在阵列的前面,奇数定位的元素最终在数组的末尾。现在,让我们假设的,我们有14个元素,像这样的:

0 1 1 2 3 4 5 6 7 8 9 10 11 12 13

我们要洗牌的元素,让他们出来是这样的:

0 2 4 6 8 10 12 1 3 5 7 9 11 13

有几个有用的意见,我们可以有关于这个置换产生的方式。首先,请注意的第一个元素不动在此置换,因为即使索引元素都应该出现在阵列的前面,这是第偶数索引的元素。接下来,注意的最后一个元素不会移动在此置换,因为奇数索引的元素都应该结束了在阵列的后面,这是最后的奇次元。

这些两个观察,放在一起,意味着,如果我们想重排在所需方式的阵列的元件,我们实际上只需要重排由与第一和最后一个元素的整体阵列的子阵列掉落。因此,展望未来,我们纯粹将专注于置换中间元素的问题。如果我们能够解决这个问题,我们已经解决了整个问题。

现在,让我们来看看数组的只是中间的元素。从我们上面的例子中,这意味着我们要开始与像这样的数组:

元素1 2 3 4 5 6 7 8 9 10 11 12

指数1 2 3 4 5 6 7 8 9 10 11 12

我们想要得到的数组是这样的:

元素2 4 6 8 10 12 1 3 5 7 9 11

指数1 2 3 4 5 6 7 8 9 10 11 12

由于该阵列通过采取0索引数组,然后斩去的第一个和最后元件构成,我们可以把它当作一个一索引数组。这将是非常重要的前进,所以一定要记住这一点。

那么究竟如何才能去产生这种排列?嗯,首先,它不会伤害看看每个元素,并试图找出在那里开始并在它结束了。如果我们这样做,我们可以写东西出来是这样的:

在位置1的元素结束了在第7位。 在第2位的元素结束了在位置1。 在第3位的元素结束了第8位。 在第4位的元素结束了第2位。 在第5位的元素结束了第9位。 在第6位的元件,结束了在3位上。 在位置7元结束了在第10位。 在位置8元结束了第4位。 在第9位的元素结束了在第11位。 在第10位的元素结束了5位。 在11位的元素结束了在12位。 在12位的元素结束了在第6位。如果你看一下这个列表中,可以发现一些模式。首先,请注意,所有的偶数元件的最后索引始终该元素的一半的位置。例如,在4位上的元素结束了在第2位,在6位等最终位置12的元件这是有道理 - 我们推元件的所有偶数元素阵列的前面,这样一半来之前他们将流离失所,移出的方式。

现在,怎么样奇数元素呢?那么,有总共12个元素。各奇数编号的元件被推到第二半,所以在位置2K + 1的奇数元件将得到内的第二一半是由k值给定的推到至少位置7.及其位置。因此,在一个奇怪的位置2K + 1的元素被映射到位置7 + K。

我们需要一分钟来概括这种想法。假设我们正在置换数组的长度为2n。在位置2倍的元件将被映射到位置X(再次,偶数得到减半了),并且在位置2×+ 1的元素将被映射到位置n + 1 + X。重申这一点:

的元素的位置p处的最终位置被确定为如下:

如果P = 2X一些整数x,然后2个↦X 如果P = 2X + 1的某个整数x,则2X + 1↦N + 1 + X现在,我们要做的东西是完全疯狂和意外。现在,我们已经确定,其中每个元素结束了分段规则:我们要么除以2,还是我们做一些奇怪的涉及N + 1。然而,从数论的角度来看,有一个单一,统一的规则解释,所有的元素都应该结束了。

我们需要的观点是,在这两种情况下,这似乎是,在某些方面,我们除以该指数由两个。对于连的情况下,新的索引真的是仅仅由两个分割而形成的。对于奇数的情况下,新的索引的有点的看起来像它可以通过在两个分割形成的(注意,2X + 1到X +(N + 1)),但有一个额外的长期在那里。在一个数论感,不过,这两种真由两个对应分裂。这里的原因。

而不是由两个取的来源的指数和分的得到的目标的指数,如果我们采取什么样的的目标的指数和乘的由两个?如果我们这样做,一个有趣的现象出现了。

假设我们的原来的号码是2倍。该目的地则X,如果我们加倍目标索引拿回2倍,我们最终与源指标。

现在假设我们原来的号码是2倍+ 1。目的地是,则n + 1 + X。现在,如果我们加倍的目标索引会发生什么?如果我们这样做,我们得到2N + 2 + 2倍。如果我们重新排列,我们可以可替换地重写这个作为(2×+ 1)+(2n + 1个)。换句话说,我们已经得到了回原来的指数,加上一个额外的(2N + 1)项。

现在的踢球者:如果我们所有的运算完成模2N + 1 的?在这种情况下,如果我们的原始数为2×+ 1,然后两次目标索引是(2×+ 1)+(2N + 1)= 2×1 +(模2N + 1)。换句话说,目标指数真的是一半的来源指数,刚刚做模2N + 1!

这使我们非常,非常有趣的见解:每一个2N个元素的数组元素的最终目的是通过把这个数字给出二,模2N + 1 的。这意味着,真的是一个很好的,统一的规则来确定在何处事事顺心。我们只需要能够由两个模2n + 1个来划分。这恰好是制定出在偶数的情况下,这是正常的整数除法,而在奇数的情况下,它的作品了采取的形式N + 1 + X。

因此,我们可以重新构建我们的问题通过以下方式:给出2n个元素的1索引的数组,我们怎么重排的元素,使这原本是在索引中的每一元素X结束了在位置x / 2 MOD(的2n + 1)λ

周期分解再访

在这一点上,我们已经取得了很多的进步。鉴于任何元素,我们知道该元素应该结束了。如果我们能找出一个很好的方式来获得整体置换的周期分解,我们就大功告成了。

这是不幸的是,在事情变得复杂。假设,例如,我们的阵列具有10个元素。在这种情况下,我们要转换数组是这样的:

初始:1 2 3 4 5 6 7 8 9 10

决赛:2 4 6 8 10 1 3 5 7 9

该置换的周期分解为(1 6 3 7 9 10 5 8 4 2)。如果我们的阵列有12个元素,我们要改变这样的:

初始:1 2 3 4 5 6 7 8 9 10 11 12

决赛:2 4 6 8 10 12 1 3 5 7 9 11

这有循环分解(1 10 7 5 9 11 12 6 3 8 4 2 1)。如果我们的阵列有14个元素,我们要改变这样的:

初始:1 2 3 4 5 6 7 8 9 10 11 12 13 14

决赛:2 4 6 8 10 12 14 1 3 5 7 9 11 13

这有循环分解(1 8 4 2)(3 9 12 6)(5 10)(7 11 13 14)。如果我们的阵列有16个元素,我们要改变这样的:

初始:1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16

决赛:2 4 6 8 10 12 14 16 1 3 5 7 9 11 13 15

这有循环分解(1 9 13 15 16 8 4 2)(3 10 5 11 14 7 12 6)。

这里的问题是,这些周期似乎并不遵循任何predictable模式。这是,如果我们要试图解决在O(1)空间和O(n)时间这个问题的一个现实问题。即使给予任何单个元素,我们可以找出周期中含有它,我们可以有效地洗牌的周期,现在还不清楚我们如何找出什么元素属于何种周期,有多少不同的周期有,等等。

原根

这就是数论进来,记住,每个元素的新位置,除以这个数字由二,模2N + 1而形成的。思考这个倒退,我们可以计算出哪个数字将每个数字的地方通过的乘法的两模2N + 1。因此,我们可以认为这个问题通过寻找反周期分解:我们挑选了一些,保持它由两个通过改装2N + 1相乘,然后重复,直到我们的周期就完成了

这产生了一个充分研究的问题。假设我们开始与数k和思考的序列K,2K,2 2 K,2 3 K,2 4 K,等等,都做模2N + 1。这样做可以使这取决于奇数2N + 1你改装不同的模式。这就解释了为什么上述循环模式似乎有些武断。

我不知道怎么会有人想通了这一点,但事实证明,有从数论中一个美丽的结果有关,如果你把这个模式会发生什么谈判mod3 K 对于一些数k:

定理:考虑序列3 取值 3 取值· 2,3 取值· 2 2 3 取值· 2 3 3 取值· 2 4 等所有的模3 K 一段K≥秒。这个顺序循环穿过每一个数字1和3之间 K ,包容性的,也就是被3整除取值而不是由3整除 S + 1

我们可以尝试这一点上的几个例子。让我们携手模27 = 3 2 。该定理说,如果我们看一下3,3· 2,第3 middot; 4,等所有的模27,那么我们应该看到所有的数字小于27是由3整除,而不是由9整除嘛,let'see我们得到什么:

3· 2 0 = 3· 1 = 3 = 3模27 3· 2 1 = 3· 2 = 6 = 6mod27 3· 2 2 = 3· 4 = 12 = 12模27 3· 2 3 = 3· 8 = 24 = 24模27 3· 2 4 = 3· 16 = 48 = 21模27 3· 2 5 = 3· 32 = 96 = 15模27 3· 2 6 = 3· 64 = 192 = 3模27我们最终看到3,6,12,15,21,和24(虽然不是以该顺序),这是确实的所有号码小于27是由3整除而不是由9整除。

我们还可以尝试这工作模27,并考虑1,2,2 2 2 3 2 4 模27,及我们应该看到所有小于27是整除1,不整除3换句话说,这应该归还所有小于27的不整除3。让我们来看看,如果这是真的数字的数字:

2 0 = 1 = 1模27 2 1 = 2 = 2模27 2 2 = 4 = 4 MOD 27 2 3 = 8 = 8模27 2 4 = 16 = 16模27 2 5 = 32 = 5模27 2 6 = 64 = 10模27 2 7 = 128 = 20模27 2 8 = 256 = 13模27 2 9 = 512 = 26模27 2 10 = 1024 = 25模27 2 11 = 2048 = 23模27 2 12 = 4096 = 19模27 2 13 = 8192 = 11模27 2 14 = 16384 = 22模27 2 15 = 32768 = 17模27 2 16 = 65536 = 7模27 2 17 = 131072 = 14模27 2 18 = 262144 = 1模27依这些,我们回到数字1,2,4,5,7,8,10,11,13,14,16,17,19,20,22,23,25,26(虽然不是在这个顺序)。这是的完全的是不是3的倍数1和26之间的数字!

此定理是至关重要的算法,原因如下:如果2N + 1 = 3 K 对于一些数目k,然后,如果我们处理包含1周期,它将适当地打乱所有号码那是不是三的倍数。如果我们再开始循环3,将适当洗牌是被3整除但不能被9的所有数字。如果我们再开始循环在9,它会正常洗牌是整除9的所有数字,但不是27。更一般地,如果我们用循环洗牌算法上的数字1,3,9,27,81,等等,那么我们会适当地重新定位阵列中的所有元素恰好一次,不会有后顾之忧,我们错过了什么。

那么,这怎样连接到3 K + 1?好了,我们需要有2N + 1 = 3 K ,所以我们需要有2N = 3 K - 1。但是,请记住 - 我们放弃了第一个和当我们这样做数组的最后元素!添加这些早在告诉我们,我们需要大小的块 3 K + 1 在此过程中能够正常工作。如果块这个尺寸,那么我们就知道的的某些的是,周期分解由包含1一个周期,一个周期不重叠含有3,含有9等不重叠的周期,这些周期会包含该数组中的所有元素。因此,我们可以开始骑自行车1,3,9,27,等,并为绝对保证的一切都被正确地抛去。这是惊人的!

为什么是这个定理真的吗?事实证明,一个数k为这1中,k 2 ,K 3 等模p N 的循环通过所有的是不是p的倍数数目(假定p是素数)被称为的一个原始根的数p N 。有一个定理,指出2是3的原根 K 的所有数字K,这就是为什么这招的作品。如果我有时间,我想回来,编辑这个答案,包括这个结果的证明,但遗憾的是我的数论是不是在一个水平,我知道如何做到这一点。

摘要

这个问题是吨的乐趣去努力。它涉及到可爱的技巧与除以二模的奇数,周期分解,原根,和三个权力。我很感谢它描述了类似的(虽然是很不同的)算法,该文的arXiv 并给了我从某种意义上说对技术背后的关键诀窍,然后让我工作的细节为您所描述的算法。

希望这有助于!

The cycle leader iteration algorithm is an algorithm for shuffling an array by moving all even-numbered entries to the front and all odd-numbered entries to the back while preserving their relative order. For example, given this input:

a 1 b 2 c 3 d 4 e 5

the output would be

a b c d e 1 2 3 4 5

This algorithm runs in O(n) time and uses only O(1) space.

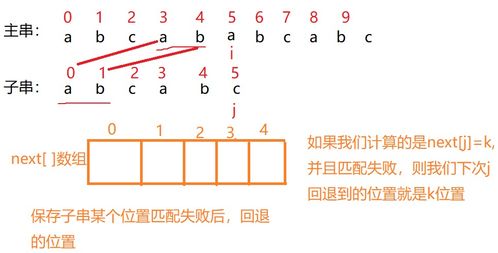

One unusual detail of the algorithm is that it works by splitting the array up into blocks of size 3k+1. Apparently this is critical for the algorithm to work correctly, but I have no idea why this is.

Why is the choice of 3k + 1 necessary in the algorithm?

Thanks!

解决方案This is going to be a long answer. The answer to your question isn't simple and requires some number theory to fully answer. I've spent about half a day working through the algorithm and I now have a good answer, but I'm not sure I can describe it succinctly.

The short version:

Breaking the input into blocks of size 3k + 1 essentially breaks the input apart into blocks of size 3k - 1 surrounded by two elements that do not end up moving.

The remaining 3k - 1 elements in the block move according to an interesting pattern: each element moves to the position given by dividing the index by two modulo 3k.

This particular motion pattern is connected to a concept from number theory and group theory called primitive roots.

Because the number two is a primitive root modulo 3k, beginning with the numbers 1, 3, 9, 27, etc. and running the pattern is guaranteed to cycle through all the elements of the array exactly once and put them into the proper place.

This pattern is highly dependent on the fact that 2 is a primitive root of 3k for any k ≥ 1. Changing the size of the array to another value will almost certainly break this because the wrong property is preserved.

The Long Version

To present this answer, I'm going to proceed in steps. First, I'm going to introduce cycle decompositions as a motivation for an algorithm that will efficiently shuffle the elements around in the right order, subject to an important caveat. Next, I'm going to point out an interesting property of how the elements happen to move around in the array when you apply this permutation. Then, I'll connect this to a number-theoretic concept called primitive roots to explain the challenges involved in implementing this algorithm correctly. Finally, I'll explain why this leads to the choice of 3k + 1 as the block size.

Cycle Decompositions

Let's suppose that you have an array A and a permutation of the elements of that array. Following the standard mathematical notation, we'll denote the permutation of that array as σ(A). We can line the initial array A up on top of the permuted array σ(A) to get a sense for where every element ended up. For example, here's an array and one of its permutations:

A 0 1 2 3 4

σ(A) 2 3 0 4 1

One way that we can describe a permutation is just to list off the new elements inside that permutation. However, from an algorithmic perspective, it's often more helpful to represent the permutation as a cycle decomposition, a way of writing out a permutation by showing how to form that permutation by beginning with the initial array and then cyclically permuting some of its elements.

Take a look at the above permutation. First, look at where the 0 ended up. In σ(A), the element 0 ended up taking the place of where the element 2 used to be. In turn, the element 2 ended up taking the place of where the element 0 used to be. We denote this by writing (0 2), indicating that 0 should go where 2 used to be, and 2 should go were 0 used to be.

Now, look at the element 1. The element 1 ended up where 4 used to be. The number 4 then ended up where 3 used to be, and the element 3 ended up where 1 used to be. We denote this by writing (1 4 3), that 1 should go where 4 used to be, that 4 should go where 3 used to be, and that 3 should go where 1 used to be.

Combining these together, we can represent the overall permutation of the above elements as (0 2)(1 4 3) - we should swap 0 and 2, then cyclically permute 1, 4, and 3. If we do that starting with the initial array, we'll end up at the permuted array that we want.

Cycle decompositions are extremely useful for permuting arrays in place because it's possible to permute any individual cycle in O(C) time and O(1) auxiliary space, where C is the number of elements in the cycle. For example, suppose that you have a cycle (1 6 8 4 2). You can permute the elements in the cycle with code like this:

int[] cycle = {1, 6, 8, 4, 2};

int temp = array[cycle[0]];

for (int i = 1; i < cycle.length; i++) {

swap(temp, array[cycle[i]]);

}

array[cycle[0]] = temp;

This works by just swapping everything around until everything comes to rest. Aside from the space usage required to store the cycle itself, it only needs O(1) auxiliary storage space.

In general, if you want to design an algorithm that applies a particular permutation to an array of elements, you can usually do so by using cycle decompositions. The general algorithm is the following:

for (each cycle in the cycle decomposition algorithm) { apply the above algorithm to cycle those elements; }

The overall time and space complexity for this algorithm depends on the following:

How quickly can we determine the cycle decomposition we want? How efficiently can we store that cycle decomposition in memory?To get an O(n)-time, O(1)-space algorithm for the problem at hand, we're going to show that there's a way to determine the cycle decomposition in O(1) time and space. Since everything will get moved exactly once, the overall runtime will be O(n) and the overall space complexity will be O(1). It's not easy to get there, as you'll see, but then again, it's not awful either.

The Permutation Structure

The overarching goal of this problem is to take an array of 2n elements and shuffle it so that even-positioned elements end up at the front of the array and odd-positioned elements end up at the end of the array. Let's suppose for now that we have 14 elements, like this:

0 1 2 3 4 5 6 7 8 9 10 11 12 13

We want to shuffle the elements so that they come out like this:

0 2 4 6 8 10 12 1 3 5 7 9 11 13

There are a couple of useful observations we can have about the way that this permutation arises. First, notice that the first element does not move in this permutation, because even-indexed elements are supposed to show up in the front of the array and it's the first even-indexed element. Next, notice that the last element does not move in this permutation, because odd-indexed elements are supposed to end up at the back of the array and it's the last odd-indexed element.

These two observations, put together, means that if we want to permute the elements of the array in the desired fashion, we actually only need to permute the subarray consisting of the overall array with the first and last elements dropped off. Therefore, going forward, we are purely going to focus on the problem of permuting the middle elements. If we can solve that problem, then we've solved the overall problem.

Now, let's look at just the middle elements of the array. From our above example, that means that we're going to start with an array like this one:

Element 1 2 3 4 5 6 7 8 9 10 11 12

Index 1 2 3 4 5 6 7 8 9 10 11 12

We want to get the array to look like this:

Element 2 4 6 8 10 12 1 3 5 7 9 11

Index 1 2 3 4 5 6 7 8 9 10 11 12

Because this array was formed by taking a 0-indexed array and chopping off the very first and very last element, we can treat this as a one-indexed array. That's going to be critically important going forward, so be sure to keep that in mind.

So how exactly can we go about generating this permutation? Well, for starters, it doesn't hurt to take a look at each element and to try to figure out where it began and where it ended up. If we do so, we can write things out like this:

The element at position 1 ended up at position 7. The element at position 2 ended up at position 1. The element at position 3 ended up at position 8. The element at position 4 ended up at position 2. The element at position 5 ended up at position 9. The element at position 6 ended up at position 3. The element at position 7 ended up at position 10. The element at position 8 ended up at position 4. The element at position 9 ended up at position 11. The element at position 10 ended up at position 5. The element at position 11 ended up at position 12. The element at position 12 ended up at position 6.If you look at this list, you can spot a few patterns. First, notice that the final index of all the even-numbered elements is always half the position of that element. For example, the element at position 4 ended up at position 2, the element at position 12 ended up at position 6, etc. This makes sense - we pushed all the even elements to the front of the array, so half of the elements that came before them will have been displaced and moved out of the way.

Now, what about the odd-numbered elements? Well, there are 12 total elements. Each odd-numbered element gets pushed to the second half, so an odd-numbered element at position 2k+1 will get pushed to at least position 7. Its position within the second half is given by the value of k. Therefore, the elements at an odd position 2k+1 gets mapped to position 7 + k.

We can take a minute to generalize this idea. Suppose that the array we're permuting has length 2n. An element at position 2x will be mapped to position x (again, even numbers get halfed), and an element at position 2x+1 will be mapped to position n + 1 + x. Restating this:

The final position of an element at position p is determined as follows:

If p = 2x for some integer x, then 2x ↦ x If p = 2x+1 for some integer x, then 2x+1 ↦ n + 1 + xAnd now we're going to do something that's entirely crazy and unexpected. Right now, we have a piecewise rule for determining where each element ends up: we either divide by two, or we do something weird involving n + 1. However, from a number-theoretic perspective, there is a single, unified rule explaining where all elements are supposed to end up.

The insight we need is that in both cases, it seems like, in some way, we're dividing the index by two. For the even case, the new index really is formed by just dividing by two. For the odd case, the new index kinda looks like it's formed by dividing by two (notice that 2x+1 went to x + (n + 1)), but there's an extra term in there. In a number-theoretic sense, though, both of these really correspond to division by two. Here's why.

Rather than taking the source index and dividing by two to get the destination index, what if we take the destination index and multiply by two? If we do that, an interesting pattern emerges.

Suppose our original number was 2x. The destination is then x, and if we double the destination index to get back 2x, we end up with the source index.

Now suppose that our original number was 2x+1. The destination is then n + 1 + x. Now, what happens if we double the destination index? If we do that, we get back 2n + 2 + 2x. If we rearrange this, we can alternatively rewrite this as (2x+1) + (2n+1). In other words, we've gotten back the original index, plus an extra (2n+1) term.

Now for the kicker: what if all of our arithmetic is done modulo 2n + 1? In that case, if our original number was 2x + 1, then twice the destination index is (2x+1) + (2n+1) = 2x + 1 (modulo 2n+1). In other words, the destination index really is half of the source index, just done modulo 2n+1!

This leads us to a very, very interesting insight: the ultimate destination of each of the elements in a 2n-element array is given by dividing that number by two, modulo 2n+1. This means that there really is a nice, unified rule for determining where everything goes. We just need to be able to divide by two modulo 2n+1. It just happens to work out that in the even case, this is normal integer division, and in the odd case, it works out to taking the form n + 1 + x.

Consequently, we can reframe our problem in the following way: given a 1-indexed array of 2n elements, how do we permute the elements so that each element that was originally at index x ends up at position x/2 mod (2n+1)?

Cycle Decompositions Revisited

At this point, we've made quite a lot of progress. Given any element, we know where that element should end up. If we can figure out a nice way to get a cycle decomposition of the overall permutation, we're done.

This is, unfortunately, where things get complicated. Suppose, for example, that our array has 10 elements. In that case, we want to transform the array like this:

Initial: 1 2 3 4 5 6 7 8 9 10

Final: 2 4 6 8 10 1 3 5 7 9

The cycle decomposition of this permutation is (1 6 3 7 9 10 5 8 4 2). If our array has 12 elements, we want to transform it like this:

Initial: 1 2 3 4 5 6 7 8 9 10 11 12

Final: 2 4 6 8 10 12 1 3 5 7 9 11

This has cycle decomposition (1 7 10 5 9 11 12 6 3 8 4 2 1). If our array has 14 elements, we want to transform it like this:

Initial: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Final: 2 4 6 8 10 12 14 1 3 5 7 9 11 13

This has cycle decomposition (1 8 4 2)(3 9 12 6)(5 10)(7 11 13 14). If our array has 16 elements, we want to transform it like this:

Initial: 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16

Final: 2 4 6 8 10 12 14 16 1 3 5 7 9 11 13 15

This has cycle decomposition (1 9 13 15 16 8 4 2)(3 10 5 11 14 7 12 6).

The problem here is that these cycles don't seem to follow any predictable patterns. This is a real problem if we're going to try to solve this problem in O(1) space and O(n) time. Even though given any individual element we can figure out what cycle contains it and we can efficiently shuffle that cycle, it's not clear how we figure out what elements belong to what cycles, how many different cycles there are, etc.

Primitive Roots

This is where number theory comes in. Remember that each element's new position is formed by dividing that number by two, modulo 2n+1. Thinking about this backwards, we can figure out which number will take the place of each number by multiplying by two modulo 2n+1. Therefore, we can think of this problem by finding the cycle decomposition in reverse: we pick a number, keep multiplying it by two and modding by 2n+1, and repeat until we're done with the cycle.

This gives rise to a well-studied problem. Suppose that we start with the number k and think about the sequence k, 2k, 22k, 23k, 24k, etc., all done modulo 2n+1. Doing this gives different patterns depending on what odd number 2n+1 you're modding by. This explains why the above cycle patterns seem somewhat arbitrary.

I have no idea how anyone figured this out, but it turns out that there's a beautiful result from number theory that talks about what happens if you take this pattern mod 3k for some number k:

Theorem: Consider the sequence 3s, 3s·2, 3s·22, 3s·23, 3s·24, etc. all modulo 3k for some k ≥ s. This sequence cycles through through every number between 1 and 3k, inclusive, that is divisible by 3s but not divisible by 3s+1.

We can try this out on a few examples. Let's work modulo 27 = 32. The theorem says that if we look at 3, 3 · 2, 3 · 4, etc. all modulo 27, then we should see all the numbers less than 27 that are divisible by 3 and not divisible by 9. Well, let'see what we get:

3 · 20 = 3 · 1 = 3 = 3 mod 27 3 · 21 = 3 · 2 = 6 = 6 mod 27 3 · 22 = 3 · 4 = 12 = 12 mod 27 3 · 23 = 3 · 8 = 24 = 24 mod 27 3 · 24 = 3 · 16 = 48 = 21 mod 27 3 · 25 = 3 · 32 = 96 = 15 mod 27 3 · 26 = 3 · 64 = 192 = 3 mod 27We ended up seeing 3, 6, 12, 15, 21, and 24 (though not in that order), which are indeed all the numbers less than 27 that are divisible by 3 but not divisible by 9.

We can also try this working mod 27 and considering 1, 2, 22, 23, 24 mod 27, and we should see all the numbers less than 27 that are divisible by 1 and not divisible by 3. In other words, this should give back all the numbers less than 27 that aren't divisible by 3. Let's see if that's true:

20 = 1 = 1 mod 27 21 = 2 = 2 mod 27 22 = 4 = 4 mod 27 23 = 8 = 8 mod 27 24 = 16 = 16 mod 27 25 = 32 = 5 mod 27 26 = 64 = 10 mod 27 27 = 128 = 20 mod 27 28 = 256 = 13 mod 27 29 = 512 = 26 mod 27 210 = 1024 = 25 mod 27 211 = 2048 = 23 mod 27 212 = 4096 = 19 mod 27 213 = 8192 = 11 mod 27 214 = 16384 = 22 mod 27 215 = 32768 = 17 mod 27 216 = 65536 = 7 mod 27 217 = 131072 = 14 mod 27 218 = 262144 = 1 mod 27Sorting these, we got back the numbers 1, 2, 4, 5, 7, 8, 10, 11, 13, 14, 16, 17, 19, 20, 22, 23, 25, 26 (though not in that order). These are exactly the numbers between 1 and 26 that aren't multiples of three!

This theorem is crucial to the algorithm for the following reason: if 2n+1 = 3k for some number k, then if we process the cycle containing 1, it will properly shuffle all numbers that aren't multiples of three. If we then start the cycle at 3, it will properly shuffle all numbers that are divisible by 3 but not by 9. If we then start the cycle at 9, it will properly shuffle all numbers that are divisible by 9 but not by 27. More generally, if we use the cycle shuffle algorithm on the numbers 1, 3, 9, 27, 81, etc., then we will properly reposition all the elements in the array exactly once and will not have to worry that we missed anything.

So how does this connect to 3k + 1? Well, we need to have that 2n + 1 = 3k, so we need to have that 2n = 3k - 1. But remember - we dropped the very first and very last element of the array when we did this! Adding those back in tells us that we need blocks of size 3k + 1 for this procedure to work correctly. If the blocks are this size, then we know for certain that the cycle decomposition will consist of a cycle containing 1, a nonoverlapping cycle containing 3, a nonoverlapping cycle containing 9, etc. and that these cycles will contain all the elements of the array. Consequently, we can just start cycling 1, 3, 9, 27, etc. and be absolutely guaranteed that everything gets shuffled around correctly. That's amazing!

And why is this theorem true? It turns out that a number k for which 1, k, k2, k3, etc. mod pn that cycles through all the numbers that aren't multiples of p (assuming p is prime) is called a primitive root of the number pn. There's a theorem that says that 2 is a primitive root of 3k for all numbers k, which is why this trick works. If I have time, I'd like to come back and edit this answer to include a proof of this result, though unfortunately my number theory isn't at a level where I know how to do this.

Summary

This problem was tons of fun to work on. It involves cute tricks with dividing by two modulo an odd numbers, cycle decompositions, primitive roots, and powers of three. I'm indebted to this arXiv paper which described a similar (though quite different) algorithm and gave me a sense for the key trick behind the technique, which then let me work out the details for the algorithm you described.

Hope this helps!