When you create an external table in Hive with an S3 location, is the data transferred?

当你创建的蜂巢(Hadoop的)与Amazon S3的源位置外部表转移到当地的Hadoop的HDFS上的数据:

在外部表的创建 当张塌塌米(乔布斯)对外部表上运行 永远(没有数据是不断转移)和MR作业读取S3的数据。什么是这里发生了S3读取的成本?是否有数据转移到HDFS单一的成本或者没有数据传输成本,而且当蜂巢创建的麻preduce工作在这个外部表运行读取成本发生。

这是例子外部表的定义是:

创建外部表MYDATA(密钥字符串,值INT)

行格式分隔的字段TERMINATED BY'='

位置S3N:// mys3bucket /';

解决方案

地图任务将直接从S3读取数据。在Map和Reduce步骤之间,数据将被写入到本地文件系统,与马之间的preduce作业(在需要多个作业查询)临时数据将被写入HDFS。

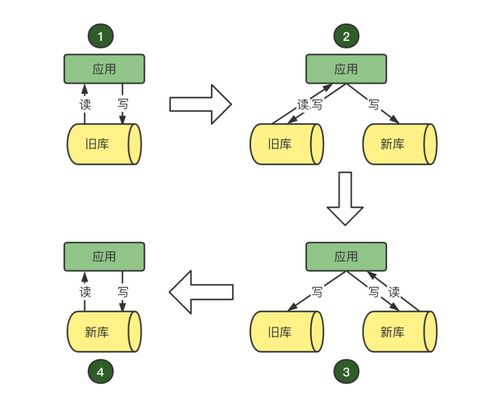

如果您担心S3读成本,它可能是有意义的创建一个存储在HDFS另一个表,并做从S3表一次性拷贝到HDFS表。

When you create an external table in Hive (on Hadoop) with an Amazon S3 source location, when is the data transferred to the local Hadoop HDFS?

- On external table creation.
- When queries (MR jobs) are run on the external table.
- Never: no data is ever transferred, and the MR jobs read the S3 data directly.

What costs are incurred here for S3 reads? Is there a single cost for transferring the data to HDFS, or is there no transfer cost, with read costs incurred each time a MapReduce job created by Hive runs on this external table?

An example external table definition would be:

    CREATE EXTERNAL TABLE mydata (key STRING, value INT)
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '='
    LOCATION 's3n://mys3bucket/';

Solution

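Creating the external table only records metadata; no data is copied to HDFS at that point. When a query runs, the map tasks read the data directly from S3, so the S3 reads happen on every query that scans the table. Between the Map and Reduce steps, data is written to the local filesystem, and between MapReduce jobs (in queries that require multiple jobs) the temporary data is written to HDFS.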

If you are concerned about S3 read costs, it might make sense to create another table that is stored on HDFS, and do a one-time copy from the S3 table to the HDFS table.
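The one-time copy could look roughly like the sketch below. The table name `mydata_hdfs` is hypothetical, and the sketch assumes the cluster's default filesystem is HDFS, so a managed (non-external) table with no `LOCATION` clause is stored in the Hive warehouse directory on HDFS.

```sql
-- Hypothetical HDFS-backed copy of the S3 table; the name is illustrative.
-- Assumes the Hive warehouse directory lives on HDFS (the default filesystem).
CREATE TABLE mydata_hdfs (key STRING, value INT)
ROW FORMAT DELIMITED FIELDS TERMINATED BY '=';

-- One-time copy: this job reads the S3 data once and writes it to HDFS.
INSERT OVERWRITE TABLE mydata_hdfs
SELECT key, value FROM mydata;
```

Later queries would then target `mydata_hdfs` instead of `mydata`, so they read from HDFS rather than S3. The copy has to be re-run if the data in the S3 bucket changes.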