Loading data (incrementally) into Amazon Redshift: S3 vs DynamoDB vs INSERT

I have a web app that needs to send reports on its usage, and I want to use Amazon Redshift as the data warehouse for that purpose. How should I collect the data?

Every time a user interacts with my app, I want to report that. So when should I write the files to S3, and how many? What I mean is:

- If I do not send the info immediately, I might lose it because of a lost connection, or because of a bug in my system while it is being collected and prepared for sending to S3.
- If I do write a file to S3 on each user interaction, I will end up with hundreds of files (each holding minimal data) that need to be managed, sorted, and deleted after being copied to Redshift. That does not seem like a good solution.

What am I missing? Should I use DynamoDB instead? Should I use simple INSERTs into Redshift instead? If I do need to write the data to DynamoDB, should I delete the holding table after it has been copied? What are the best practices?

In any case, what are the best practices for avoiding data duplication in Redshift?

Appreciate the help!

Recommended answer

It is preferable to aggregate event logs before ingesting them into Amazon Redshift.

The benefits are:

You will make better use of the parallel nature of Redshift; a COPY on a set of larger files in S3 (or from a large DynamoDB table) will be much faster than individual INSERTs or a COPY of a small file.

You can pre-sort your data (especially if the sorting is based on event time) before loading it into Redshift. This also improves your load performance and reduces the need to VACUUM your tables.

You can accumulate your events in several places before aggregating and loading them into Redshift:

Local file to S3 - the most common way is to aggregate your logs on the client/server and upload them to S3 every x MB or y minutes. Many log appenders support this functionality (for example, FluentD or Log4J), so you don't need to make any modifications in the code; it can be done with container configuration only. The downside is that you risk losing some logs, because the local log files can be deleted before they are uploaded.

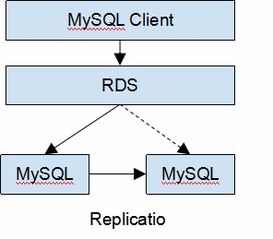

DynamoDB - as @Swami described, DynamoDB is a very good way to accumulate the events.

Amazon Kinesis - the recently released service is also a good way to stream your events from the various clients and servers to a central location in a fast and reliable way. The events are kept in order of insertion, which makes it easy to load them into Redshift later, already pre-sorted. The events are stored in Kinesis for 24 hours, so you can schedule a job that reads from Kinesis and loads into Redshift every hour, for example, for better performance.
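For the local-file option above, the "every x MB or y minutes" rule can be sketched as a small buffer that flushes on whichever limit is hit first. This is only an illustration under assumptions: the `EventBatcher` class, the `ts` field, and the `upload` callback are hypothetical names, and in a real setup `upload` would be whatever writes one object to S3 (a log appender or an SDK call).

```python
import json
import time
from typing import Callable, Dict, List, Optional


class EventBatcher:
    """Buffers events and hands them to `upload` as one newline-delimited JSON batch."""

    def __init__(
        self,
        upload: Callable[[bytes], None],  # hypothetical: e.g. a function that puts one object in S3
        max_bytes: int = 5 * 1024 * 1024,
        max_age_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._upload = upload
        self._max_bytes = max_bytes
        self._max_age = max_age_seconds
        self._clock = clock
        self._events: List[Dict] = []
        self._size = 0
        self._started: Optional[float] = None

    def add(self, event: Dict) -> None:
        """Buffer one event; flush if the size or age limit has been reached."""
        if self._started is None:
            self._started = self._clock()
        self._events.append(event)
        # Approximate serialized size: characters of the JSON line plus the newline.
        self._size += len(json.dumps(event)) + 1
        too_big = self._size >= self._max_bytes
        too_old = self._clock() - self._started >= self._max_age
        if too_big or too_old:
            self.flush()

    def flush(self) -> None:
        """Upload the buffered events as a single batch, sorted by event time."""
        if not self._events:
            return
        # Pre-sort by the assumed "ts" field so the file arrives in event-time order.
        self._events.sort(key=lambda e: e["ts"])
        body = "\n".join(json.dumps(e) for e in self._events) + "\n"
        self._upload(body.encode("utf-8"))
        self._events, self._size, self._started = [], 0, None
```

Note that this sketch only checks the age limit when a new event arrives, so a quiet period would need a timer or a `flush()` call at shutdown. Events still in the buffer are lost if the process dies, which is exactly the risk described for this option.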

Please note that all these services (S3, SQS, DynamoDB and Kinesis) allow you to push the events directly from the end users/devices, without the need to go through an intermediate web server. This can significantly improve the availability of your service (how it handles increased load or server failure) and the cost of the system (you only pay for what you use, and you don't need to keep underutilized servers around just for logs).

See, for example, how you can get temporary security tokens for mobile devices here: http://aws.amazon.com/articles/4611615499399490

Another important set of tools that allow direct interaction with these services are the various SDKs, for example for Java, .NET, JavaScript, iOS and Android.

Regarding the de-duplication requirement: with most of the options above you can do that in the aggregation phase. For example, when you are reading from a Kinesis stream, you can check that there are no duplicates among your events by analysing a large buffer of events before putting them into the data store.
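A minimal sketch of that buffer check, assuming every event carries a unique `event_id` assigned by the producer (the field name and the `deduplicate` helper are assumptions made for illustration, not part of any AWS API):

```python
from typing import Dict, Iterable, Iterator, Set


def deduplicate(events: Iterable[Dict], seen: Set[str]) -> Iterator[Dict]:
    """Yield only events whose event_id has not been seen yet.

    `seen` is owned by the caller so it can be kept across several buffers.
    """
    for event in events:
        event_id = event["event_id"]  # assumed unique ID set by the producer
        if event_id in seen:
            continue  # duplicate delivery, drop it
        seen.add(event_id)
        yield event
```

Keeping `seen` outside the function lets the same set span consecutive buffers, but it also grows without bound, so a real reader would need to expire old IDs (for example, anything older than the window in which duplicates can appear).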

However, you can do this check in Redshift as well. A good practice is to COPY the data into a staging table and then SELECT INTO a well-organized and sorted table.
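The staging-table step can be sketched as follows. To keep the example runnable without a cluster, it uses Python's built-in `sqlite3` as a stand-in for Redshift, so the COPY itself is not shown and the SQL dialect differs in details. The table names, column names, and the `merge_staging` helper are assumptions, and the `SELECT DISTINCT` assumes duplicates are exact copies of a row. Because Redshift does not enforce primary key constraints, the anti-join against the target table is what actually keeps duplicates out.

```python
import sqlite3


def create_tables(conn: sqlite3.Connection) -> None:
    """Create the target table and a staging table with the same columns."""
    conn.execute("CREATE TABLE events (event_id TEXT, ts INTEGER, payload TEXT)")
    conn.execute("CREATE TABLE events_staging (event_id TEXT, ts INTEGER, payload TEXT)")


def merge_staging(conn: sqlite3.Connection) -> None:
    """Move new rows from staging into events, skipping duplicates, in time order."""
    with conn:  # run both statements in one transaction
        conn.execute(
            """
            INSERT INTO events (event_id, ts, payload)
            SELECT DISTINCT s.event_id, s.ts, s.payload
            FROM events_staging AS s
            WHERE NOT EXISTS (
                SELECT 1 FROM events AS e WHERE e.event_id = s.event_id
            )
            ORDER BY s.ts
            """
        )
        # Empty the staging table so the next load starts clean.
        conn.execute("DELETE FROM events_staging")
```

In Redshift the staging table would be filled by COPY from S3 (or DynamoDB) before a statement like the one in `merge_staging` runs.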

Another best practice you can implement is to have a daily (or weekly) table partition. Even if you would like to have one big, long events table, if the majority of your queries run on a single day (the last day, for example), you can create a set of tables with a similar structure (events_01012014, events_01022014, events_01032014...). Then you can SELECT INTO ... WHERE date = ... into each of these tables. When you want to query the data from multiple days, you can use UNION ALL.
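As a rough sketch of the multi-day query, the helpers below build the per-day table names and join them with UNION ALL. They assume the example names above follow an `events_MMDDYYYY` pattern; that reading of the names, and the helper names themselves, are assumptions.

```python
from datetime import date, timedelta


def daily_table_name(day: date, prefix: str = "events") -> str:
    """Return the per-day table name, assuming an events_MMDDYYYY pattern."""
    return f"{prefix}_{day.strftime('%m%d%Y')}"


def union_all_query(start: date, end: date, columns: str = "*") -> str:
    """Build one SELECT per day in [start, end], combined with UNION ALL."""
    if end < start:
        raise ValueError("end must not be before start")
    days = [start + timedelta(days=i) for i in range((end - start).days + 1)]
    selects = [f"SELECT {columns} FROM {daily_table_name(d)}" for d in days]
    return "\nUNION ALL\n".join(selects)
```

For example, `union_all_query(date(2014, 1, 1), date(2014, 1, 3))` produces three SELECT statements over events_01012014, events_01022014 and events_01032014. A view defined over such a query is one way to keep the "one big table" feel for queries that span many days.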