O(M + N)和O(MN)之间的区别?区别、MN

我在尝试通过不同的方法来找到一个算法的复杂性。在数学上,我碰到一个氧(M + N),另一个O(MN)的方法。但我无法掌握或说想象这一点。它不象我看着他们,并得到了啊!这是怎么回事的感觉!用自己的例子,或任何其他工具,有人能解释一下吗?

解决方案我寻找直觉的建议被认为是实验如下:

首先,认识到,m和n是对输入的两个不同的测量的。它们可能是两个输入流的长度,以矩阵的边的长度,或它们的数据结构的两个不同的属性,例如边缘,并且在同一图的节点数,或任何类似的措施的计数。

的直觉是大O EX presses绑定上的真正的运行时间(或如比较计数或需要空间一些其他方面)的算法在一个简单的术语功能 - 调用的是R(M,N) - 乘以一些任意常数。我们忽略了常数因子,并认为由相同的R-界作为一个家庭所有的算法通过调用它们的运行时间O(R(M,N))。

因此,大O(M + N)说,真正的运行时间由一些函数R(M,N)= C(M + N)为适当大的m和n为界。为图形例如,这表示该算法的实际运行时间由顶点和边的数目的总和的倍数为界。

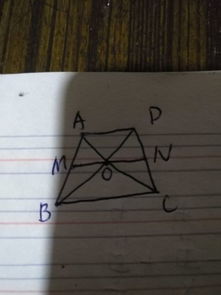

您能想到的边界功能在3D图形与坐标轴米,n和R(M,N)。或者,你能想到的图表:

R(M,N)= M + N

--------------

m = 1时2 3 4

n = 1的1 2 3 4

2 3 4 5 6

3 4 5 6 7

4 5 6 7 8

有关R(M,N)= MN,你有

R(M,N)= MN

--------------

m = 1时2 3 4

n = 1的1 2 3 4

2 2 4 6 8

3 3 6 9 12

4 4 8 12 16

作为一个三维曲线图,所述第一函数是一个平面,第二个是更快增长函数在几乎所有点。这意味着,如果m和n增长足够大,一个O(MN)结合最终会更大(相当于一个潜在的慢的程序)比O(M + N),因为该常量变得无足轻重了。

有关快速增长的成本的一个例子,假设一个O(M + N)的算法有3在其运行时绑定(从而可能在比较两种算法上面的小本投入非常慢),一个额外的常数因子:

R(M,N)= 3(M + N)

--------------

m = 1时2 3 4

N = 1 3 9 12 15

2 9 12 15 18

3 12 15 18 21

4 15 18 21 24

因此,在O(M + N)看起来像它的约束比O(MN)一个在上图中限制较少。但是看的情况下M = N = 100。这里结合在O(M + N)的算法是3(M + N)= 600。但是,O(MN)算法与小常数已结合MN = 10000显然要如果m和n的大的第一个。

@Anonymous提出在这篇文章中,这混淆大O和大西塔的初始版本,细点。大O仅涉及边界或上限的在数量上被测量。例如,这意味着的每次的O(n)的算法也为O(n log n)的,并为O(n ^ 2)。如果实际运行时间由增长较慢的功能为界,它也有界由所有增长更快的那些。

然而,这是很常见的人说:这个算法是O(n),而这意味着绑定是的紧张的。也就是说,Cn为上部结合在运行时间为一些常数C和Dn也是下界一些其他常数D(和适当大的n)的。这种紧密的绑定正确表述为西塔(N),而不是为O(n)。一个西塔(R(M,N))算法是(粗略地讲)成正比,R(M,N)。

的运行时间我会添加最后,有很多情况下,你不能忽视的常量。存在着大量的算法在文献中是渐近比常用的更快,但有常数太大,以致于实际问题尺寸他们总是太慢。计算几何有很多例子。基数2排序是另一回事。这是西塔(N),但在实践中一个很好的快速排序(西塔(N log n)的平均情况)将在规模高达数组打败它至少达到10 ^ 8的整数。

I was trying to find the complexities of an algorithm via different approaches. Mathematically I came across one O(m+n) and another O(mn) approach. However I am unable to grasp or say visualize this. It's not like I look at them and get the "Ahh! That's what's going on" feeling! Can someone explain this using their own examples or any other tool?

解决方案My recommendation for finding intuition is thought experiments as follows:

First, realize that m and n are two different measurements of the input. They might be the lengths of two input streams, the lengths of sides of a matrix, or the counts of two different attributes of the same data structure, such as edge and node count of the same graph, or any similar measures.

The intuition is that big-O expresses a bound on the true run time (or some other aspect such as comparison count or space needed) of an algorithm in terms of a simple function - call that R(m, n) - multiplied by some arbitrary constant. We ignore the constant factors and think of all algorithms bounded by the same R as a family by calling their run times O( R(m, n) ).

Consequently, big O(m + n) says that the true run time is bounded by some function R(m,n) = C(m + n) for suitably big m and n. For the graph example, this says that the actual run time of the algorithm will be bounded by a multiple of the sum of the number of vertices and edges.

You can think of the bounding function as a graph in 3d with axes m, n, and R(m,n). Or you can think of charts:

R(m,n) = m + n

--------------

m= 1 2 3 4

n=1 1 2 3 4

2 3 4 5 6

3 4 5 6 7

4 5 6 7 8

For R(m,n) = mn, you have

R(m,n) = mn

--------------

m= 1 2 3 4

n=1 1 2 3 4

2 2 4 6 8

3 3 6 9 12

4 4 8 12 16

As a 3d graph, the first function is a plane and the second is a much faster-growing function at almost all points. This means that if m and n grow large enough, an O(mn) bound will ultimately be larger (corresponding to a potentially slower program) than an O(m+n) because the constants become insignificant.

For an example of the cost of rapid growth, suppose an O(m+n) algorithm has an extra constant factor of 3 in its runtime bound (making it potentially very slow on small inputs compared to both algorithms above):

R(m,n) = 3(m + n)

--------------

m= 1 2 3 4

n=1 3 9 12 15

2 9 12 15 18

3 12 15 18 21

4 15 18 21 24

So the the O(m + n) looks like it's bound is less constrained than the O(mn) one in the chart above. But look at the case m=n=100. Here bound on the O(m + n) algorithm is 3(m + n) = 600. But the O(mn) algorithm with the small constant has bound mn = 10000. Clearly you want the first if m and n are large.

@Anonymous raised a fine point on the initial version of this article, which confused big-O and big-Theta. Big-O only deals with bounds or upper limits on the quantity being measured. For example, this means that every O(n) algorithm is also O(n log n) and O(n^2). If the real run time is bounded by the slower-growing function, it is also bounded by all faster-growing ones.

Yet it is quite common for people to say "this algorithms is O(n)" while meaning that the bound is tight. That is, that Cn is an upper bound on the run time for some constant C and Dn is also a lower bound for some other constant D (and suitably large n). Such a tight bound is properly stated as Theta(n), not O(n). The run time of a Theta(R(m, n)) algorithm is (roughly speaking) proportional to R(m, n).

I'll add finally that there are many cases where you can't ignore constants. There exist lots of algorithms in the literature that are asymptotically "faster" than those in common use, but have constants so large that for practical problem sizes they are always too slow. Computational geometry has many examples. Radix 2 sort is another. It's Theta(n), but in practice a good quicksort (Theta(n log n) average case) will beat it on arrays of size up to at least 10^8 integers.